DataVisor is Using Multi-Dimensional Algorithms to Detect Fraudulent User Accounts

- Please tell us a little bit about your background and current position at DataVisor.

- What are the most significant threats companies and their customers/clients/users face from these fraudulent accounts?

- What are sleeper cells?

- What is device flashing and how do bad actors use it to create fraudulent accounts?

- Now that these fraudulent accounts are becoming more and more difficult to identify, how does DataVisor’s Unsupervised Machine Learning Engine better identify fraudulent accounts and activity?

- What happens once DataVisor identifies an account as fraudulent?

Professional attackers who want to exploit legitimate social media users are mass-registering abusive user accounts in ways that make them appear legitimate. Trying to detect these abusive accounts one at a time, before they can do any damage, is nearly impossible. DataVisor’s proprietary algorithm takes a new approach: it analyzes multiple dimensions across many accounts and uncovers the hidden correlations between fraudulent user accounts, stopping them before they can attack.

Please tell us a little bit about your background and current position at DataVisor.

I received my Ph.D. in Computer Science from Carnegie Mellon University with a thesis in network security. After graduating, I joined Microsoft Silicon Valley, where I met Fang Yu, with whom I later founded DataVisor in December 2013. At Microsoft, we collaborated on network security and then moved on to application-level security, where we worked with a variety of applications, including Bing, Hotmail, Azure, and e-commerce payments, and on application-level fraud issues.

Problems such as email signatures were normally approached in isolation, but over the years we discovered that they are all connected, and many of them are just symptoms of abuse and fraud at the user level. At the root of the problem, they all required a lot of user accounts, either newly created or compromised legitimate ones. After seven years of working together at Microsoft, we wanted a new framework that addressed the root of the problem by taking a broader view of account analysis rather than treating each symptom individually. At the same time, we needed a better way to track attackers who try to evade detection by changing their tactics, which led us to focus on an unsupervised approach.

What are the most significant threats companies and their customers/clients/users face from these fraudulent accounts?

In the social sector, we see fraudulent accounts being used to send spam and carry out phishing attacks. Fake reviews can push up the ranking of an app that is actually nothing more than malware, and fake Airbnb listings can collect deposits and disappear. Fraudulent users posing as financial consultants and posting fake news (a very popular term these days!) can drive a company’s stock up or down.

In the financial sector, the attacks we most often see are identity theft, where stolen credentials are used to open accounts, and fraudulent purchases made with stolen credit cards, particularly of digital goods such as those sold on iTunes.

What are sleeper cells?

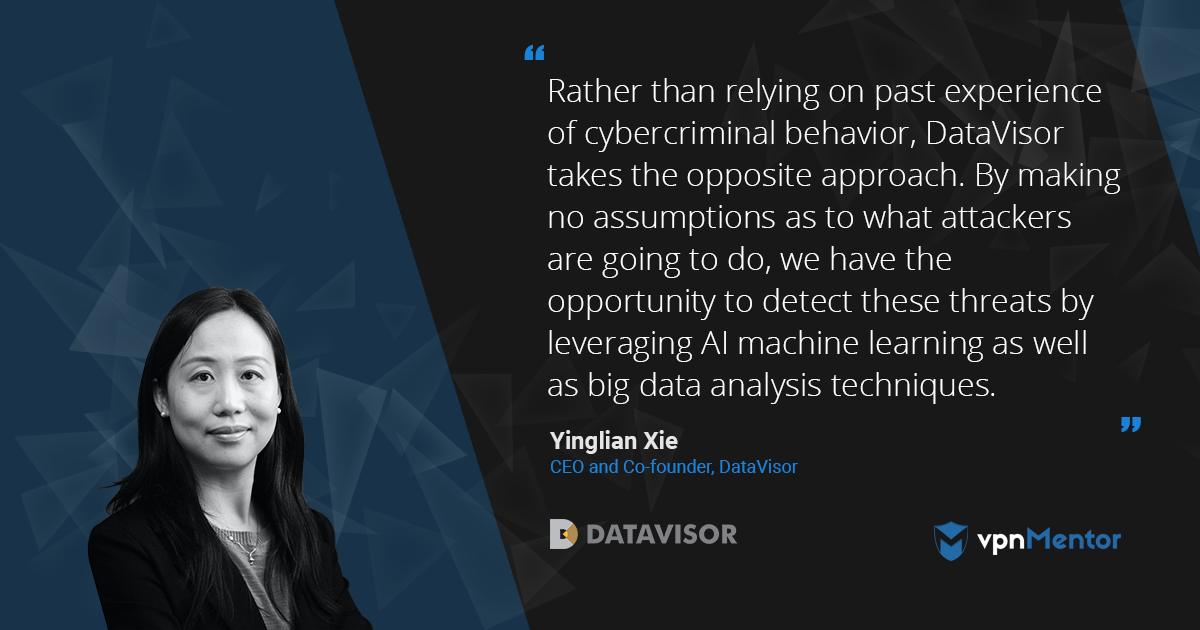

Sleeper cells are fake accounts that attackers create and then leave quiet or only lightly active for a while, so that they look established before they are used for abuse. They are becoming more common and are an effective way to avoid detection. On social media, new accounts may be subject to restrictions because they have not yet established a history and it is unknown whether real users are behind them. For instance, they may only be allowed to post a few times a week, or their transactions may be capped at a certain dollar amount, in the hope of limiting the damage an abusive account can do. Because attackers are aware of these limitations, they set up a lot of fake accounts and “incubate” them.

During the incubation period, the accounts may perform some legitimate-looking activity, posing as real users in order to establish a history. Some of the more sophisticated ones will, for example, like other posts and occasionally get liked back. This engagement makes them look like legitimate, active users.

Once they have established a reputation, these accounts can do far more damage than a new account, since the initial usage restrictions have been lifted, allowing attackers to make much higher profits.
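To make the attacker’s incentive concrete, here is a minimal sketch of an age-based probation policy of the kind described above. The thresholds and the names `PROBATION_DAYS`, `limits_for`, and `allow_transaction` are hypothetical, chosen only for illustration; real services set their own rules.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical probation policy; the thresholds are illustrative only.
PROBATION_DAYS = 30
NEW_ACCOUNT_POSTS_PER_WEEK = 3
NEW_ACCOUNT_MAX_TRANSACTION = 100.0  # dollars


@dataclass(frozen=True)
class Limits:
    posts_per_week: Optional[int]     # None means no cap
    max_transaction: Optional[float]  # None means no cap


def limits_for(account_age_days: int) -> Limits:
    """Return usage caps that depend only on how old the account is."""
    if account_age_days < PROBATION_DAYS:
        return Limits(NEW_ACCOUNT_POSTS_PER_WEEK, NEW_ACCOUNT_MAX_TRANSACTION)
    return Limits(posts_per_week=None, max_transaction=None)


def allow_transaction(account_age_days: int, amount: float) -> bool:
    """Allow the transaction unless it exceeds the account's cap."""
    cap = limits_for(account_age_days).max_transaction
    return cap is None or amount <= cap


# A freshly registered fake account is capped...
print(allow_transaction(account_age_days=5, amount=500.0))   # False
# ...but the same account, "incubated" past probation, is not.
print(allow_transaction(account_age_days=45, amount=500.0))  # True
```

Because a policy like this looks only at account age, an attacker who simply waits it out ends up with the same limits as a real user, which is exactly what sleeper cells exploit.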

What is device flashing and how do bad actors use it to create fraudulent accounts?

Device fingerprinting has become a very popular way to detect fraud. If we remember every device we’ve seen, we can easily distinguish devices that belong to legitimate users from those that were used for fraudulent activity in the past. Naturally, attackers look for ways to get around this, and device flashing is one of them. With device flashing, a real physical device is present and the traffic does come from it, but the attackers flash the device, rewriting its software so that it shows up as an entirely new device every time it is used to set up a fraudulent account. This way, they get around detection mechanisms that rely on device fingerprinting to prevent fraud.
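The weakness of exact-match fingerprinting can be shown with a small sketch. It assumes a fingerprint is simply a hash of reported device attributes; the attribute names and values are hypothetical, and production fingerprinting uses many more signals.

```python
import hashlib


def fingerprint(attrs: dict) -> str:
    """Hash device attributes in a canonical (sorted-key) order."""
    canonical = "|".join(f"{key}={attrs[key]}" for key in sorted(attrs))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def is_known_fraud_device(attrs: dict, fraud_fingerprints: set) -> bool:
    """Exact-match lookup against fingerprints previously tied to fraud."""
    return fingerprint(attrs) in fraud_fingerprints


# Hypothetical attribute names and values for a device seen in past fraud.
device = {"model": "iPhone 6", "os": "iOS 10.3", "device_id": "A1B2C3"}
fraud_fingerprints = {fingerprint(device)}

print(is_known_fraud_device(device, fraud_fingerprints))   # True

# After flashing, the same hardware reports a new identifier,
# so its fingerprint no longer matches anything on record.
flashed = dict(device, device_id="Z9Y8X7")
print(is_known_fraud_device(flashed, fraud_fingerprints))  # False
```

Changing a single attribute produces a completely different hash, so a lookup keyed on the fingerprint alone treats the flashed device as brand new.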

Now that these fraudulent accounts are becoming more and more difficult to identify, how does DataVisor’s Unsupervised Machine Learning Engine better identify fraudulent accounts and activity?

That’s a great question. Solving this problem is complex because of the sophisticated techniques used to create these fraudulent accounts. Besides the techniques we’ve already discussed, such as device flashing and sleeper cells, attackers use virtual private networks (VPNs) to make their accounts appear to be distributed around the world, when in reality they are all orchestrated from one central point.

At DataVisor, we actually take the opposite approach. First, rather than relying on past experience to detect cybercriminal behavior, we make no assumptions about what attackers are going to do, which gives us the opportunity to detect threats we have not seen before. Second, we look at these accounts across more than one dimension of user activity. For example, the device fingerprint is one dimension, and the age of the account and its level of activity are others. When we look at only one dimension, it is a lot easier to be fooled. When we look holistically at many account attributes, including the profile information, how long the account has been active, the IP address, and the type of device, as well as account behavior such as when the user logged in, made a purchase, or sent out content, we get a much better picture of the account overall. Based on this, we take an approach we call Unsupervised Machine Learning, which looks at accounts and their activity together.
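A small sketch can show why one dimension is easy to fool while several are not. It compares two accounts across a handful of hypothetical dimensions, loosely modeled on those listed above, and counts how many agree. This naive agreement count is an illustrative stand-in, not DataVisor’s algorithm.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class Account:
    # Hypothetical dimensions, loosely following those named in the text.
    device_fingerprint: str
    ip_prefix: str           # e.g. the first three octets of the IP address
    device_model: str
    os_version: str
    signup_hour: int         # hour of day (0-23) the account was created
    first_purchase_hour: int


def matching_dimensions(a: Account, b: Account) -> list:
    """Names of the dimensions on which two accounts agree."""
    return [f.name for f in fields(Account)
            if getattr(a, f.name) == getattr(b, f.name)]


def similarity(a: Account, b: Account) -> float:
    """Fraction of dimensions on which two accounts agree."""
    return len(matching_dimensions(a, b)) / len(fields(Account))


a = Account("fp-1111", "203.0.113", "iPhone 6", "iOS 10.3", 3, 4)
b = Account("fp-2222", "203.0.113", "iPhone 6", "iOS 10.3", 3, 4)

# The fingerprint alone says these are two unrelated devices...
print(a.device_fingerprint == b.device_fingerprint)  # False
# ...but they agree on every other dimension.
print(matching_dimensions(a, b))
print(round(similarity(a, b), 2))  # 0.83
```

A flashed device changes its fingerprint, but it is much harder for an attacker to also vary the network, hardware, software, and timing of every account at once.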

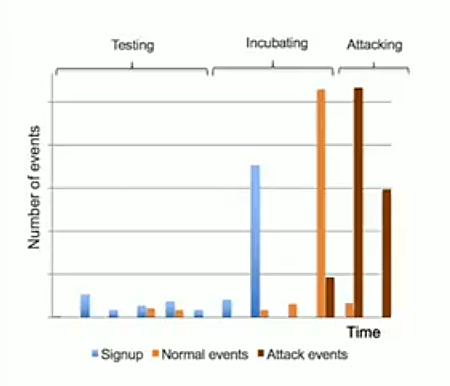

This detection is not done by looking at one account in isolation to decide whether it is dangerous, but by scrutinizing many accounts together for similarities and activity patterns. We know professional attackers do not create these fraudulent accounts as one-offs, because that is not a high-profit way of operating. They want to attack at scale, and for that, they need many accounts. Because the same attackers orchestrate these accounts, the accounts share something collectively; the key is finding which dimensions are correlated. So we make no assumptions and let the algorithms analyze the many attributes of these accounts together to detect those hidden associations. In this way, we cast a very wide net and discover those patterns automatically. That is the essence of Unsupervised Machine Learning: rather than relying on historical experience or previously labeled data, it uses machine learning and big data analysis techniques to discover new patterns automatically from the accounts and their attributes.
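The idea of finding correlated dimensions without labels can be illustrated with a deliberately simple technique: try every combination of `k` attributes and report any value combination shared by at least `min_size` accounts. This brute-force grouping is a stand-in chosen for clarity, not DataVisor’s proprietary algorithm; the account records and the parameters `k` and `min_size` are hypothetical.

```python
from collections import defaultdict
from itertools import combinations


def find_correlated_groups(accounts, dimensions, k=3, min_size=3):
    """Group accounts that share identical values on some k dimensions.

    No fraud labels are used: every combination of k dimensions is tried,
    and any value combination shared by at least `min_size` accounts is
    reported as a candidate group.
    """
    groups = {}
    for dims in combinations(dimensions, k):
        buckets = defaultdict(list)
        for acct in accounts:
            buckets[tuple(acct[d] for d in dims)].append(acct["id"])
        for values, ids in buckets.items():
            if len(ids) >= min_size:
                groups[(dims, values)] = ids
    return groups


# Hypothetical accounts: three from one ring (distinct fingerprints) and one real user.
accounts = [
    {"id": "r1", "fingerprint": "fp-a", "ip_type": "datacenter",
     "device": "iPhone 6", "os": "iOS 10.3", "browser": "Safari 10"},
    {"id": "r2", "fingerprint": "fp-b", "ip_type": "datacenter",
     "device": "iPhone 6", "os": "iOS 10.3", "browser": "Safari 10"},
    {"id": "r3", "fingerprint": "fp-c", "ip_type": "datacenter",
     "device": "iPhone 6", "os": "iOS 10.3", "browser": "Safari 10"},
    {"id": "u1", "fingerprint": "fp-d", "ip_type": "residential",
     "device": "Pixel", "os": "Android 8", "browser": "Chrome 60"},
]
dimensions = ["fingerprint", "ip_type", "device", "os", "browser"]

groups = find_correlated_groups(accounts, dimensions)
for (dims, values), ids in groups.items():
    print(dims, "->", ids)
```

Nobody tells the function which attributes matter: the flashed fingerprints never form a group, while every three-way combination of the other attributes surfaces the same three ring accounts. Trying all combinations grows quickly with the number of attributes, so this only illustrates the idea and would not scale as written.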

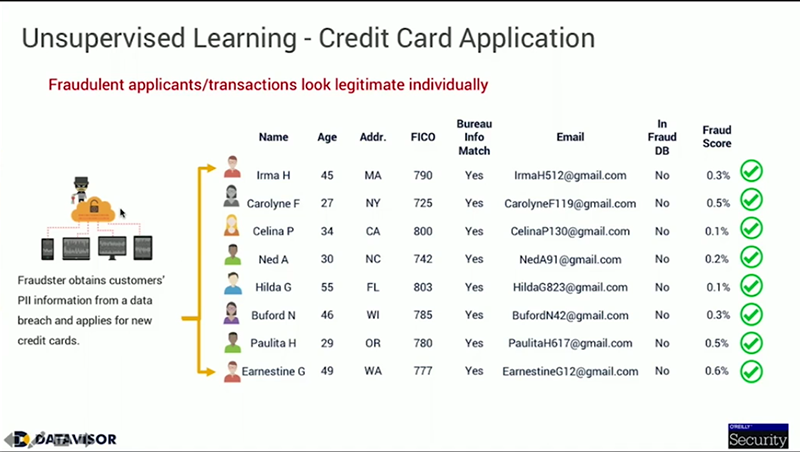

For example, here is a case of a fraud ring that used personal information acquired through a data breach to apply for new credit cards in the victims’ names. Looking at these applications individually, you don’t see much, because all the information appears legitimate: the names match the ages and other personal information, the credit scores seem correct, and none of the applicants were found in a fraud database.

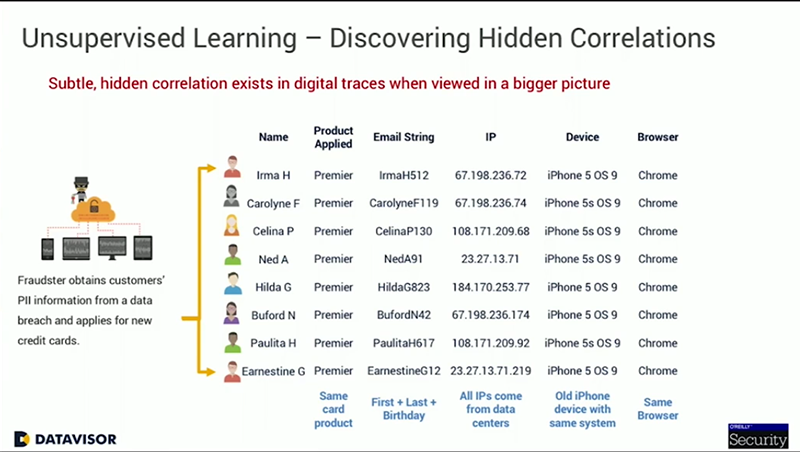

However, if you look at these applications through the lens of Unsupervised Machine Learning and consider other information, such as the digital footprint, the email address used to create the account, the IP address, and the browser and device that were used, patterns begin to emerge. All the applicants applied for the same type of product; every email address was built from the same combination of first name, last initial and birthday; all the IP addresses came from data centers; and all the applications were submitted from similar, older iPhones running the same operating system and the same browser. By looking at the bigger picture rather than at each application individually, DataVisor was able to uncover these suspicious correlations and detect over 200 fraudulent applications.
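The shared signals in this example can be expressed as simple checks over a batch of applications. The sketch below assumes the birthday appears in the email as `MMDD` and uses reserved documentation IP ranges (RFC 5737) as placeholders for a real list of data-center ranges; the field names, sample applications, and `ring_signals` helper are all hypothetical.

```python
import ipaddress
from datetime import date

# Placeholder "data center" ranges. These are reserved documentation blocks
# (RFC 5737), standing in for a real list of hosting-provider IP ranges.
DATACENTER_NETS = [
    ipaddress.ip_network("198.51.100.0/24"),
    ipaddress.ip_network("203.0.113.0/24"),
]


def email_matches_template(email, first, last, birthday):
    """True if the local part is first name + last initial + birthday (MMDD)."""
    local = email.split("@", 1)[0].lower()
    return local == f"{first}{last[0]}{birthday:%m%d}".lower()


def from_datacenter(ip):
    """True if the address falls inside one of the placeholder ranges."""
    addr = ipaddress.ip_address(ip)
    return any(addr in net for net in DATACENTER_NETS)


def ring_signals(apps):
    """Check the shared signals from the example across a batch of applications."""
    return {
        "same_product": len({a["product"] for a in apps}) == 1,
        "templated_emails": all(
            email_matches_template(a["email"], a["first"], a["last"], a["birthday"])
            for a in apps
        ),
        "datacenter_ips": all(from_datacenter(a["ip"]) for a in apps),
        "same_device_stack": len({(a["device"], a["os"], a["browser"]) for a in apps}) == 1,
    }


# Hypothetical applications; each looks legitimate on its own.
apps = [
    {"product": "rewards-card", "first": "Alice", "last": "Ng",
     "birthday": date(1980, 3, 14), "email": "alicen0314@example.com",
     "ip": "203.0.113.7", "device": "iPhone 6", "os": "iOS 10.3", "browser": "Safari 10"},
    {"product": "rewards-card", "first": "Bob", "last": "Diaz",
     "birthday": date(1975, 11, 2), "email": "bobd1102@example.com",
     "ip": "198.51.100.20", "device": "iPhone 6", "os": "iOS 10.3", "browser": "Safari 10"},
]

print(ring_signals(apps))
```

Each check is harmless on its own; it is the fact that all of them hold across the whole batch that makes the group suspicious.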

What happens once DataVisor identifies an account as fraudulent?

There are many ways to deal with accounts that are labeled as suspicious. For example, a social media service could immediately block accounts identified as fraudulent with high confidence. It may also opt to quarantine suspicious accounts and impose stricter policies to limit their activity, so they can remain active but can’t do damage. In other cases, the service may take steps to validate these accounts further.
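A tiered response like this can be sketched as a mapping from a confidence score to an action. The interview does not say how confidence is expressed, so this assumes a score between 0 and 1; the `Action` names and the thresholds are hypothetical and would be tuned by each service.

```python
from enum import Enum


class Action(Enum):
    BLOCK = "block"            # high-confidence fraud: stop it immediately
    QUARANTINE = "quarantine"  # keep active but restrict what it can do
    VERIFY = "verify"          # ask for further validation
    ALLOW = "allow"


def choose_action(confidence: float,
                  block_at: float = 0.95,
                  quarantine_at: float = 0.7,
                  verify_at: float = 0.4) -> Action:
    """Map an assumed fraud-confidence score in [0, 1] to a response tier."""
    if confidence >= block_at:
        return Action.BLOCK
    if confidence >= quarantine_at:
        return Action.QUARANTINE
    if confidence >= verify_at:
        return Action.VERIFY
    return Action.ALLOW


for score in (0.99, 0.8, 0.5, 0.1):
    print(score, choose_action(score).value)
```

Keeping the thresholds as parameters lets a service trade off false positives against damage: a stricter service can lower `block_at`, while one that worries about inconveniencing real users can route more accounts to verification instead.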